A BRIEF HISTORY OF ARTIFICIAL INTELLIGENCE

The history of Artificial Intelligence (AI) is marked by technological breakthroughs and pivotal moments that have profoundly shaped our understanding of machine learning, decision-making, and human-machine interaction.

Since the first concepts in the 1950s, AI has evolved from a theoretical discipline to a key technology that touches nearly every aspect of modern life.

The history of AI is a fascinating journey from early theoretical concepts to today’s highly advanced systems. It reflects humanity's desire to create intelligent machines while highlighting the need to develop and use these technologies responsibly.

Early Concepts and the Birth of AI (1950s and 1960s)

AI’s beginnings trace back to the mid-20th century when mathematicians and computer scientists explored the possibility of creating machines that could think like humans.

A key pioneer was the British mathematician Alan Turing, who asked in his 1950 paper "Computing Machinery and Intelligence": "Can machines think?" He proposed the "imitation game," now known as the Turing Test, in which a machine passes if a human judge cannot reliably tell it apart from a person through text-based conversation.

Another milestone was the 1956 Dartmouth Conference, organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. This event is widely regarded as the founding moment of AI as a research field. The term "Artificial Intelligence" was coined in the 1955 proposal for the conference, which set out a vision of machines capable of intelligent tasks like problem-solving, learning, and language processing.

ELIZA and Early Language Processing (1960s)

In the 1960s, practical applications of AI concepts began to appear, especially in language processing. In 1966, Joseph Weizenbaum presented ELIZA, a program that simulated a psychotherapeutic conversation by applying simple pattern-matching rules to the user's input.

The name "ELIZA" refers to Eliza Doolittle, the main character of George Bernard Shaw's play "Pygmalion" and of its musical adaptation "My Fair Lady." In the story, Eliza, a simple flower seller with a strong accent, is trained by Professor Henry Higgins to adopt refined speech, allowing her to pose as a cultured lady. Weizenbaum chose this name because, like Eliza Doolittle, his program could simulate a deeper ability: it appeared to engage in sophisticated conversation without truly understanding language. ELIZA imitated a capability it didn't genuinely possess, merely filling prepared response templates whenever it spotted certain keywords.

Although ELIZA lacked deep intelligence, it demonstrated how machines could create the illusion of human interaction. It foreshadowed later conversational systems and language models, which replace hand-written patterns with far more complex, learned models.
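The keyword-and-template idea can be illustrated with a minimal sketch. The rules below are hypothetical and invented for this example; they are not taken from Weizenbaum's original script, which was considerably more elaborate.

```python
import re

# Hypothetical keyword rules, invented for this sketch (not ELIZA's real script).
# Each rule pairs a pattern with a response template.
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\b(mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."


def respond(user_input: str) -> str:
    """Return the reply of the first rule whose pattern matches the input."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Reuse part of the user's own words in the canned reply.
            return template.format(match.group(1))
    return FALLBACK


print(respond("I am sad."))   # How long have you been sad?
print(respond("Hello"))       # Please go on.
```

The program has no model of meaning at all: it only echoes fragments of the input back inside fixed templates, which is exactly why the conversation can feel attentive without any understanding behind it.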

The Era of Expert Systems (1970s and 1980s)

In the 1970s and 1980s, AI research focused on expert systems designed to assist or replace human experts. These systems stored structured knowledge in the form of if-then rules to make decisions.

The goal behind expert systems was to transfer the problem-solving abilities of an expert to a machine using explicit knowledge and logical reasoning.
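A minimal sketch of how if-then rules can drive a decision is shown here, using a simple forward-chaining loop. The rules and fact names are hypothetical toy examples chosen for illustration; they do not come from any historical expert system.

```python
# Hypothetical toy knowledge base: (set of conditions, conclusion).
# Invented for illustration only.
RULES = [
    ({"has_feathers", "lays_eggs"}, "is_bird"),
    ({"is_bird", "cannot_fly", "swims"}, "is_penguin"),
]


def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new conclusion can be added."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


observed = {"has_feathers", "lays_eggs", "cannot_fly", "swims"}
print("is_penguin" in forward_chain(observed, RULES))  # True
```

Everything the system can conclude is fixed by the rules someone wrote down in advance; a fact that matches no rule simply leads nowhere.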

One of the earliest significant expert systems was DENDRAL, begun in 1965 by Edward Feigenbaum, Joshua Lederberg, and colleagues at Stanford University. DENDRAL was specifically created to help chemists identify unknown organic molecules. The system analyzed mass spectra and used a rule-based method to determine possible molecular structures.

DENDRAL is considered groundbreaking because it automated part of the chemists' decision-making process and relied on an extensive knowledge base in chemistry. By combining chemical rules with a systematic search over candidate structures, DENDRAL could narrow the data down to the most plausible molecular structures, easing the chemists' complex task of structural analysis.

Based on insights from DENDRAL, the MYCIN medical expert system was developed in the 1970s. MYCIN was designed to help doctors diagnose blood infections and recommend suitable antibiotics. It was particularly useful in situations requiring quick action and precise decisions.

MYCIN used a set of if-then rules based on medical knowledge to identify specific bacteria causing infections. It also provided guidance on dosage and antibiotic selection based on symptoms and test results.

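Despite their success, expert systems relied on hand-coded rules and explicit knowledge, which limited their flexibility. A rule could only fire on conditions that someone had anticipated, and although some systems, MYCIN among them, attached certainty factors to their conclusions rather than plain "true" or "false" values, they could not learn from new data. This made them poorly suited to dynamic, complex environments where new or unforeseen situations arose.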

Setbacks and Renewed Momentum (1980s to 1990s)

In the late 1980s, AI research entered a period of disillusionment known as the "AI winter." Many of the high expectations raised by early successes with expert systems and rule-based approaches couldn’t be met.

These systems reached their limits, as they heavily depended on explicit knowledge and hard-coded rules. In dynamic, real-world environments with new situations or data, such systems often lacked flexibility. These limitations led to a decline in AI investment and interest, with many researchers turning to other technologies.

Despite this setback, the 1990s saw significant progress in AI research. A key development during this time was the rediscovery and advancement of neural networks. These models, inspired by the functioning of the human brain, had been introduced in theory in the 1940s and 1950s but couldn't be applied in practice at scale due to limited computing power and data.

In 1986, David Rumelhart, Geoffrey Hinton, and Ronald Williams popularized the backpropagation algorithm for training neural networks. Backpropagation passes the output error backward through the network to work out how much each weight contributed to it, so that every weight can be adjusted to reduce the error. This is what enables the networks to "learn."

Neural networks consist of multiple layers of artificial neurons connected to each other. The core idea is that these networks can process data by adjusting weights between neurons and identifying patterns in the data. What makes neural networks special is their ability to learn from data, rather than relying on explicit rules like expert systems. This flexibility made them more suitable for working in complex, dynamic environments.
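To make the idea of adjusting weights concrete, here is a minimal sketch of backpropagation on a deliberately tiny network: one sigmoid hidden unit feeding one linear output, trained on a single example. The network shape, the squared-error loss, the learning rate, and the starting weights are all assumptions made for this illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Tiny network: one sigmoid hidden unit followed by a linear output."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y_hat = w2 * h + b2
    return h, y_hat


def loss(params, x, y):
    """Squared error between the prediction and the target."""
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2


def backprop(params, x, y):
    """Gradients of the loss for (w1, b1, w2, b2), obtained with the chain rule."""
    w1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    d_y_hat = y_hat - y            # error at the output
    d_w2 = d_y_hat * h
    d_b2 = d_y_hat
    d_h = d_y_hat * w2             # error passed back to the hidden unit
    d_z = d_h * h * (1.0 - h)      # through the sigmoid's derivative
    d_w1 = d_z * x
    d_b1 = d_z
    return [d_w1, d_b1, d_w2, d_b2]


def train(params, x, y, lr=0.1, steps=200):
    """Plain gradient descent: move each weight against its gradient."""
    params = list(params)
    for _ in range(steps):
        grads = backprop(params, x, y)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params


start = [0.5, 0.0, -0.3, 0.1]      # arbitrary starting weights
trained = train(start, x=1.0, y=1.0)
print(loss(start, 1.0, 1.0), loss(trained, 1.0, 1.0))
```

Real networks apply the same chain-rule bookkeeping to matrices of weights and many training examples, but the principle is the one shown: compute the error, trace it backward, and nudge each weight in the direction that shrinks it.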

With the availability of more powerful computers and large data sets generated by the internet and digitalization in the 1990s, AI research found new momentum. Instead of relying on predefined rules, research shifted towards statistical models and machine learning. These models could identify patterns and make predictions from large datasets without explicit human intervention.

A major breakthrough of this time was IBM's chess computer Deep Blue defeating world chess champion Garry Kasparov in 1997. Deep Blue didn’t use neural networks but a combination of brute-force search and sophisticated heuristics to find the best moves in chess. Nevertheless, this success showed the world that machines could compete with the best human players in strategically complex games. It was a symbolic moment that renewed confidence in the potential of AI.

The Era of Deep Learning and Neural Networks (2000s to 2010s)

With the rise of deep learning during the 2000s and 2010s, AI underwent a transformation. Deep learning, based on deep neural networks, enabled machines to recognize patterns in large datasets and handle complex tasks such as image and speech recognition. Advances in computer vision allowed machines to identify objects in images and videos.

In the early years of neural networks, however, there were significant challenges. One of the biggest issues was the vanishing gradient problem, which made it difficult to train deep neural networks with many layers effectively. As the error signal is passed backward through the network, it is multiplied by a small factor at every layer, so by the time it reaches the earliest layers (those closest to the input) it can be close to zero. This made it almost impossible to update the weights in those layers, hindering the learning of deep features. This obstacle held back the development of more powerful neural networks for a long time.
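A rough back-of-the-envelope sketch shows why the gradient shrinks so quickly. It assumes a simplified chain of single sigmoid units with every weight equal to 1 and every pre-activation equal to 0, which is the most favorable case for the sigmoid, since its derivative peaks there at 0.25. The function name and parameters are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def gradient_scale(depth: int, pre_activation: float = 0.0, weight: float = 1.0) -> float:
    """Factor applied to a gradient after passing back through `depth` sigmoid layers.

    Simplifying assumption: a chain of single units that all share the same
    weight and pre-activation, so every layer contributes the same factor.
    """
    s = sigmoid(pre_activation)
    per_layer = weight * s * (1.0 - s)   # sigmoid derivative, at most 0.25
    return per_layer ** depth


for depth in (1, 5, 10, 20):
    print(depth, gradient_scale(depth))
```

Under these assumptions, ten layers already scale the gradient by 0.25 to the tenth power, roughly one millionth, so the earliest layers receive almost no learning signal.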

The breakthrough of deep learning was made possible by the availability of large datasets and powerful GPUs. Neural networks could learn increasingly deep structures, leading to significant advances in machine vision, natural language processing, and autonomous driving.

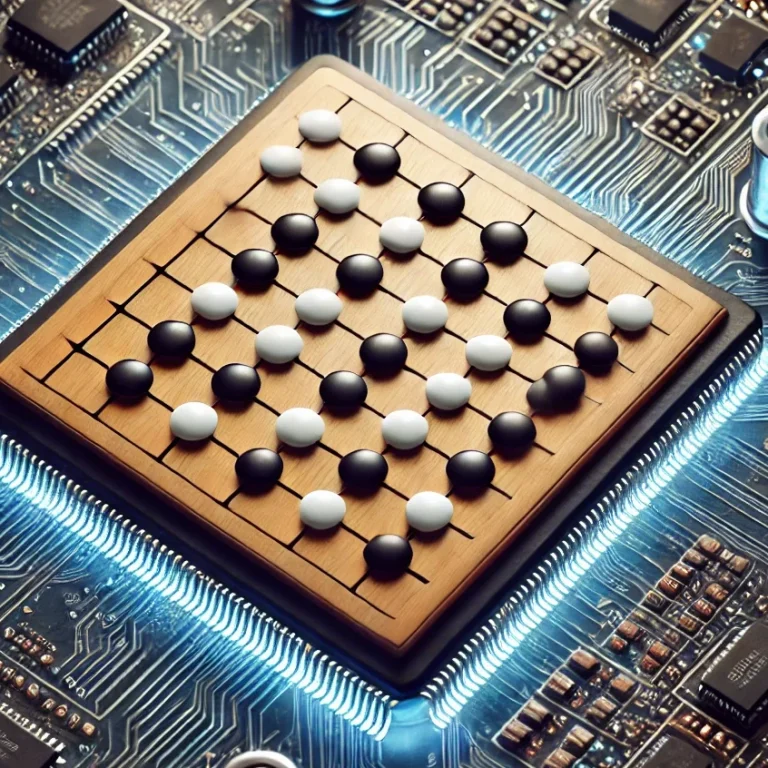

A notable example was DeepMind's AlphaGo, which defeated Lee Sedol, one of the world's strongest Go players, by four games to one in 2016. Go is considered one of the most complex board games, and AlphaGo's success demonstrated that AI could master tasks long thought to require human intuition and strategic thinking.

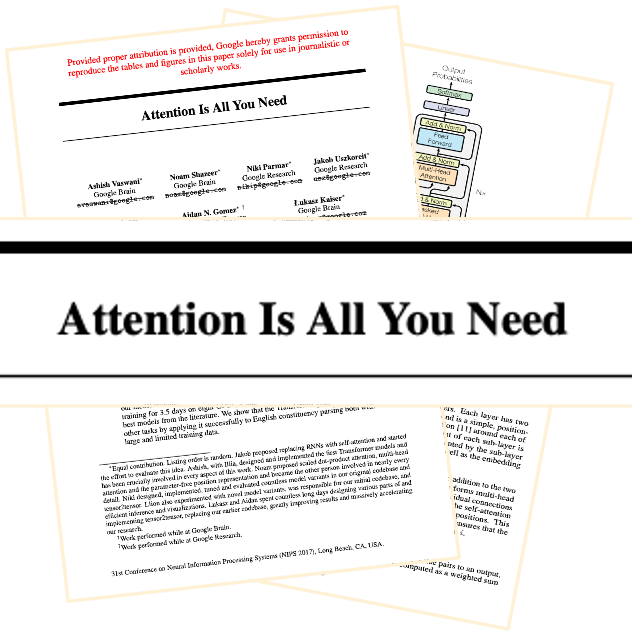

The 2017 Breakthrough: "Attention Is All You Need" and the Revolution of Transformer Models

The year 2017 marked a turning point when researchers at Google published the paper "Attention Is All You Need." It introduced the Transformer architecture, which has become the foundation for modern AI development. Previously, many advanced NLP models were based on recurrent neural networks (RNNs), including Long Short-Term Memory (LSTM) networks, which process text one step at a time and struggled with long sequences.

The Transformer differed by using attention mechanisms, enabling the model to focus on different parts of a text simultaneously, regardless of their position. This allowed it to recognize complex relationships and connections in long texts.

The self-attention mechanism, a central concept of the Transformer, made it possible to understand the context of each word comprehensively and generate more accurate predictions.
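The core computation can be sketched in a few lines. This is a simplified illustration of scaled dot-product self-attention, assuming the word vectors themselves serve as queries, keys, and values; a real Transformer first applies learned projection matrices and uses several attention heads in parallel. The toy vectors are invented for the example.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections.

    Simplification: each input vector acts as its own query, key, and value.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this position to every position, regardless of distance.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in vectors
        ]
        weights = softmax(scores)
        # New representation: weighted average of all value vectors.
        output = [
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(d)
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy "word" vectors; the first and third are identical.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(tokens)
print(weights[0])
```

In this toy input, the first position attends equally to itself and to the identical third vector and less to the second, even though the third is further away. Position plays no role in the weighting, which is the property that lets Transformers relate distant words directly.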

The publication of this paper opened a new era in machine learning and was instrumental in advancing natural language processing.

Modern AI and the Era of Large Language Models (2020s)

The 2020s ushered in a new era of large language models and natural language processing.

Especially notable were the Transformer-based models developed by OpenAI, such as GPT-3 (2020) and later GPT-4 (2023), which set new standards in a machine's ability to generate human-like text and answer complex questions.

In November 2022, OpenAI marked another milestone with the introduction of ChatGPT, built on a model from the GPT-3.5 series. ChatGPT made AI's natural language capabilities far more usable in conversation, particularly through the use of Reinforcement Learning from Human Feedback (RLHF).

In RLHF, human raters rank candidate responses, a reward model is trained on those rankings, and the language model is then fine-tuned to produce responses that the reward model scores highly. The feedback shapes the model during training rather than updating it live in each conversation, and it steers the model toward contextually relevant and meaningful responses.

ChatGPT demonstrated its versatility by not only answering complex questions but also generating creative texts and explaining technical concepts in an understandable way.

Thanks to its user-friendliness and natural language communication capabilities, ChatGPT quickly gained popularity and was used in numerous fields such as customer support, content creation, and education.

The introduction of this model showcased the immense potential of AI in everyday applications, setting new standards for human-machine interaction by making AI accessible across various aspects of life.

The Future of Artificial Intelligence

Despite tremendous progress, AI still faces challenges. Ethical questions, bias in AI systems, data privacy, and transparency are central issues in research and development. It remains to be seen how AI systems will be designed to be used responsibly and contribute to humanity's well-being.

Another significant area of research is the development of Artificial General Intelligence (AGI), which would be able to handle a wide range of tasks as well as or better than humans.

While today’s AI models, known as Artificial Narrow Intelligence (ANI), are specialized for specific tasks, AGI could respond flexibly and intelligently to various challenges. There is also the concept of Artificial Superintelligence (ASI), which would surpass human intelligence by a significant margin.

You can find more in-depth information on the concepts of ANI, AGI, and ASI in a dedicated deep dive.

